Co-Authored by: Giannis Prokopiou, Panagiotis Kyrmpatsos

Chatbots are everywhere, and creating them efficiently is key. One important aspect of chatbot design is persistence. While saving data locally can work for small systems, a better solution for production-level applications is to use a database, like PostgreSQL.

If you’re looking to use PostgreSQL for chatbot memory, there’s a package available to help with that: LangChain’s Postgres Memory. It provides a reliable way to store chatbot memory.

LangChain also offers a step-by-step tutorial on using PostgreSQL for persistence: LangGraph PostgreSQL Tutorial.

This post expands on that, showing how to integrate PostgreSQL memory into an application using FastAPI.

😼 The GitHub repository can be found here:

Why Use FastAPI and PostgreSQL?

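FastAPI is a modern web framework known for its speed and its native support for async request handlers, which pairs well with an async database driver. PostgreSQL provides reliable persistence for the chatbot’s memory: past messages are stored per conversation thread and loaded back as context on later requests, even after the app restarts.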

Challenge

How do we open the PostgreSQL connection once, share it across FastAPI’s async request handlers, and close it cleanly when the app shuts down?

Tech Stack

- FastAPI — Web framework for API development

- PostgreSQL — Database for memory persistence

- Docker & Docker Compose — For containerized deployment

- LangGraph & LangChain — To create agentic chatbot workflows

Step 1: Setting Up the FastAPI Project

First, create a project and install dependencies (example below):

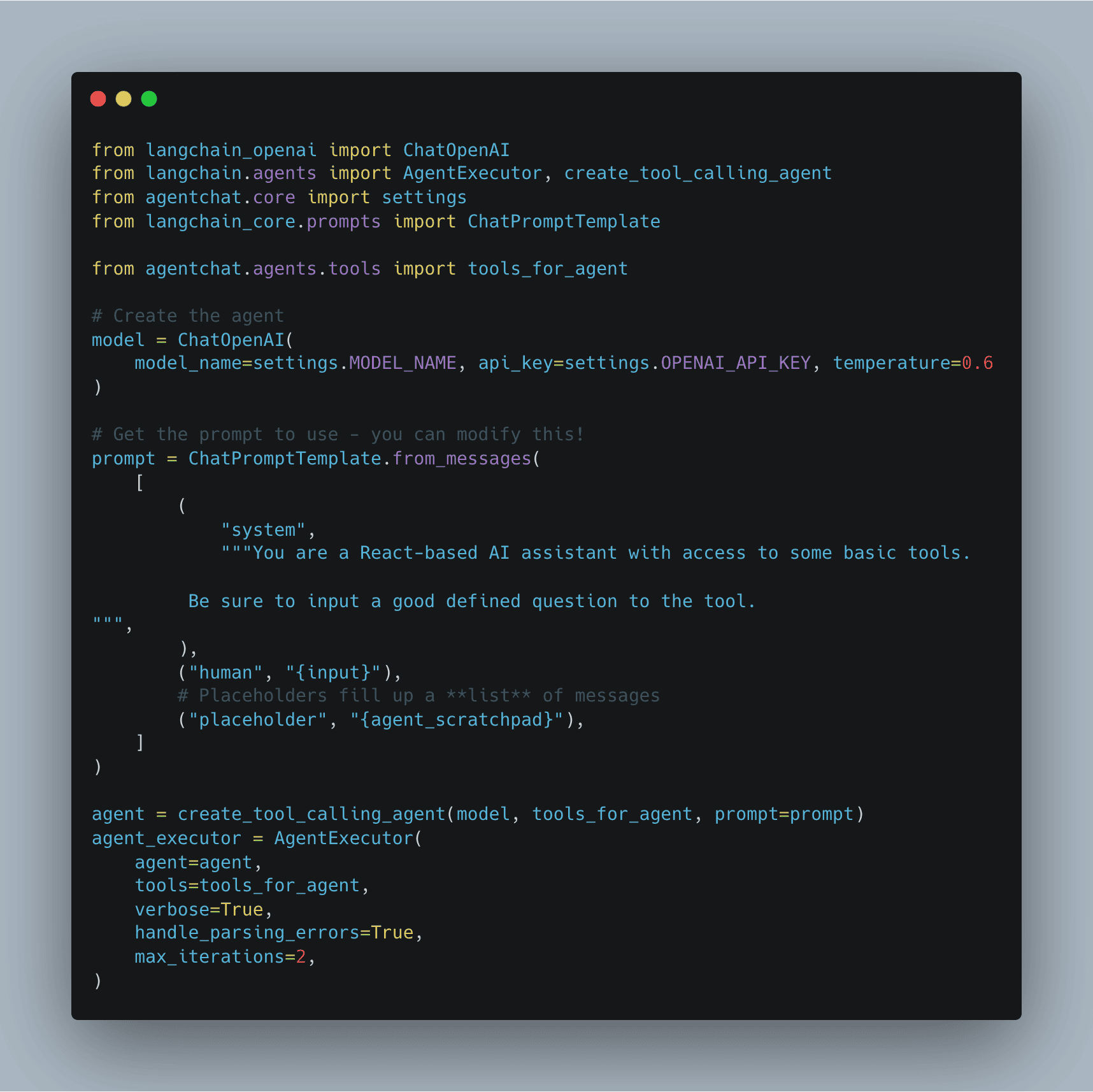

Define the Agent

In this example, we create a simple agent with a single tool that multiplies two numbers.

First we define the tool:
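In LangChain, a tool is usually an ordinary typed Python function with a docstring, wrapped with the @tool decorator from langchain_core.tools; the decorator turns the function name, docstring, and type hints into the tool description and argument schema the model sees. A minimal sketch of the multiplication tool follows. The name multiply is illustrative, and the decorator is omitted so the snippet runs without LangChain installed.

```python
def multiply(a: int, b: int) -> int:
    """Multiply two integers and return the product.

    The docstring and type hints matter: when this function is wrapped with
    LangChain's @tool decorator, they become the tool's description and
    argument schema.
    """
    return a * b


if __name__ == "__main__":
    print(multiply(6, 7))  # prints 42
```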

Then we can define a simple agent using LangChain agents:

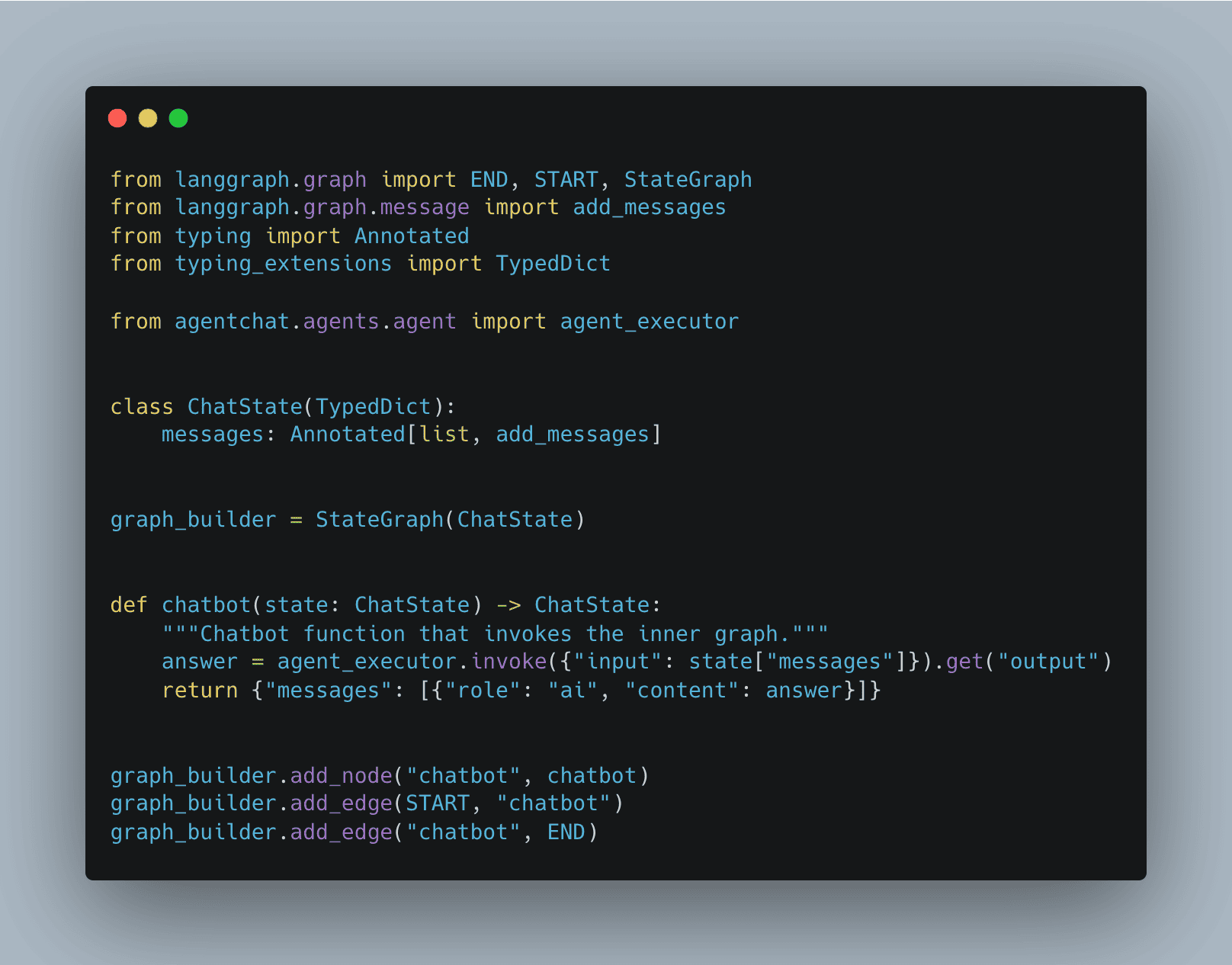

Define the Interactive Chat

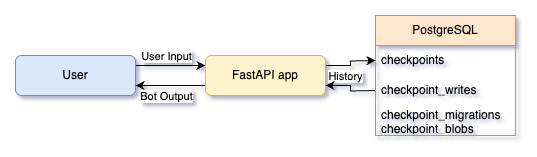

After defining the agent, we build an interactive graph to control the chatbot flow.

Here we define a simple graph with one node: the chatbot itself. It takes the conversation state, sends it to the agent, and returns the agent’s response.
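The data flow of this one-node graph can be modeled in plain Python. In LangGraph itself, the state typically holds a list of messages merged by the add_messages reducer, and the chatbot node is wired from START to END: the node returns only the new message, and the reducer appends it to the history. In the sketch below, echo_agent, append_messages and run_graph are hypothetical stand-ins (no LLM and no LangGraph), and append_messages is a simplified reducer.

```python
from typing import Callable, TypedDict


class State(TypedDict):
    messages: list[dict]


def echo_agent(messages: list[dict]) -> dict:
    """Hypothetical stand-in for the LLM agent: echoes the last user message."""
    last = messages[-1]["content"]
    return {"role": "assistant", "content": f"You said: {last}"}


def chatbot(state: State) -> dict:
    """The single node: send the conversation to the agent, return only the new message."""
    reply = echo_agent(state["messages"])
    return {"messages": [reply]}


def append_messages(state: State, update: dict) -> State:
    """Simplified reducer (LangGraph's add_messages also updates messages by ID)."""
    return {"messages": state["messages"] + update["messages"]}


def run_graph(state: State, node: Callable[[State], dict] = chatbot) -> State:
    """START -> chatbot -> END: run the node once and merge its update into the state."""
    return append_messages(state, node(state))


if __name__ == "__main__":
    final = run_graph({"messages": [{"role": "user", "content": "What is 6 * 7?"}]})
    print(final["messages"][-1]["content"])  # You said: What is 6 * 7?
```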

Step 2: Setting Up the FastAPI App

We’ll set up a basic FastAPI app with one endpoint to interact with the chatbot. The key part here is using a lifespan event to handle the connection to PostgreSQL, which will be used to manage the chatbot’s memory.

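In FastAPI, lifespan is an async context manager passed to the app that handles startup and shutdown: code before its yield runs once when the app starts, and code after the yield runs once when the app shuts down. This makes it the natural place to set up resources like database connections at startup and clean them up at shutdown.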

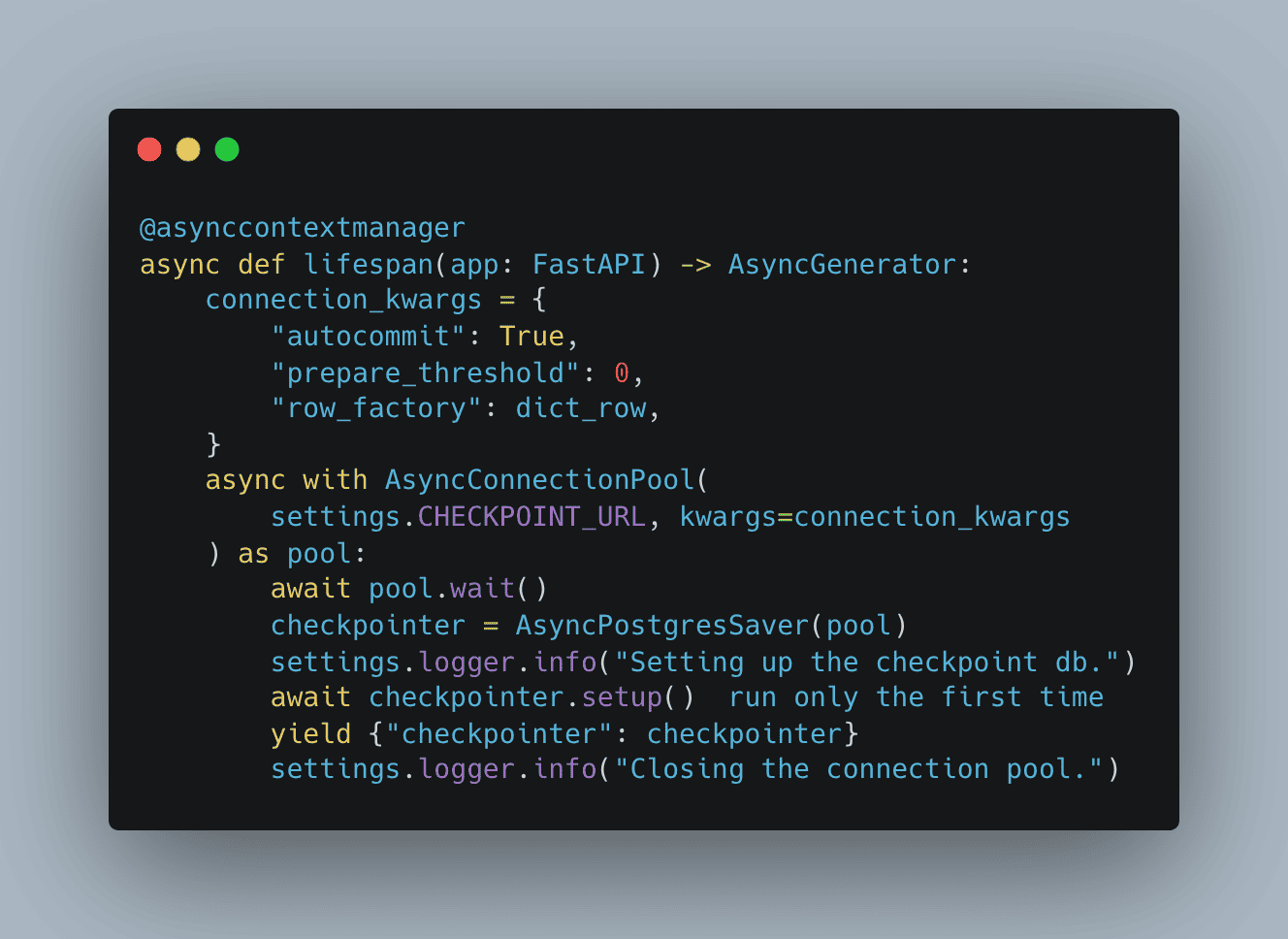

Setting up the lifespan:

Let’s break down what’s happening in this lifespan function.

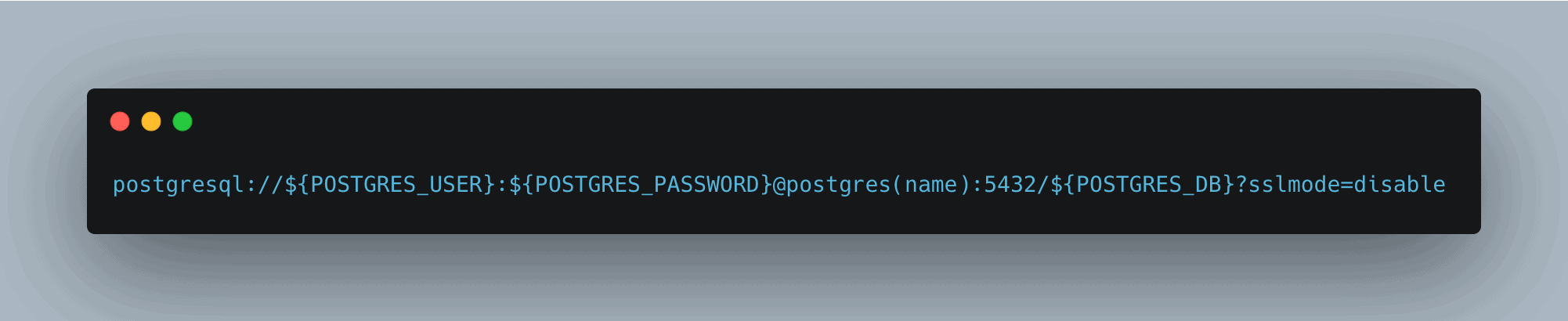

First, CHECKPOINT_URL is the PostgreSQL connection string. It follows the standard PostgreSQL URI format: postgresql://user:password@host:port/dbname
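If the password contains characters such as @, : or /, it has to be percent-encoded, otherwise the URL is split in the wrong place. A small helper like the hypothetical build_checkpoint_url below assembles the string from its parts with the standard library. Inside Docker Compose, host is typically the database service name rather than localhost; the credentials shown are placeholders.

```python
from urllib.parse import quote, unquote, urlsplit


def build_checkpoint_url(user: str, password: str, host: str, port: int, dbname: str) -> str:
    """Assemble a postgresql:// URL, percent-encoding the parts that may contain special characters."""
    return (
        f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{quote(dbname, safe='')}"
    )


if __name__ == "__main__":
    url = build_checkpoint_url("chat", "p@ss:word/1", "postgres", 5432, "chatbot")
    print(url)  # postgresql://chat:p%40ss%3Aword%2F1@postgres:5432/chatbot
    parts = urlsplit(url)
    print(parts.hostname, parts.port, unquote(parts.password))  # postgres 5432 p@ss:word/1
```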

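Then, an asynchronous connection pool is opened with AsyncConnectionPool (from the psycopg_pool package), and the checkpointer is created with AsyncPostgresSaver on top of that pool. A pool lets concurrent requests reuse a small set of open connections instead of connecting to PostgreSQL on every call. In LangGraph’s Postgres checkpointer documentation, the pool is opened with the connection options autocommit=True and prepare_threshold=0.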

The line await checkpointer.setup() creates the tables the checkpointer needs and applies any pending schema migrations. It runs on every startup, but it is idempotent: when the schema is already in place there is nothing to do, so calling it unconditionally is safe.

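The yield statement hands control to the running app. When the lifespan yields a dictionary, for example {"checkpointer": checkpointer}, each entry becomes available on request.state, so endpoints can reach shared resources like the checkpointer without creating them per request.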

Once the app shuts down, the connection pool is automatically closed.
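The startup, yield, and shutdown sequence can be modeled without FastAPI or a database. In the sketch below, FakePool and FakeSaver are hypothetical stand-ins for AsyncConnectionPool and AsyncPostgresSaver, and serve() imitates what the framework does: it enters the lifespan at startup, hands the yielded dictionary to a request (in FastAPI, that is what request.state exposes), and exits the lifespan at shutdown.

```python
import asyncio
from contextlib import asynccontextmanager

events: list[str] = []


class FakePool:
    """Hypothetical stand-in for psycopg_pool.AsyncConnectionPool."""

    async def __aenter__(self):
        events.append("pool opened")
        return self

    async def __aexit__(self, exc_type, exc, tb):
        events.append("pool closed")


class FakeSaver:
    """Hypothetical stand-in for AsyncPostgresSaver."""

    def __init__(self, pool: FakePool) -> None:
        self.pool = pool

    async def setup(self) -> None:
        events.append("schema ready")


@asynccontextmanager
async def lifespan(app):
    # Startup: runs once, before the first request is served.
    async with FakePool() as pool:
        checkpointer = FakeSaver(pool)
        await checkpointer.setup()
        # Every key in this dict is what endpoints would read from request.state.
        yield {"checkpointer": checkpointer}
    # Shutdown: resuming after yield exits the async with block, which closed the pool.


async def serve() -> None:
    """Imitate the framework: startup, one request, shutdown."""
    async with lifespan(app=None) as state:
        checkpointer = state["checkpointer"]
        assert isinstance(checkpointer, FakeSaver)
        events.append("handled request")


if __name__ == "__main__":
    asyncio.run(serve())
    print(events)  # ['pool opened', 'schema ready', 'handled request', 'pool closed']
```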

Setting Up the Endpoint

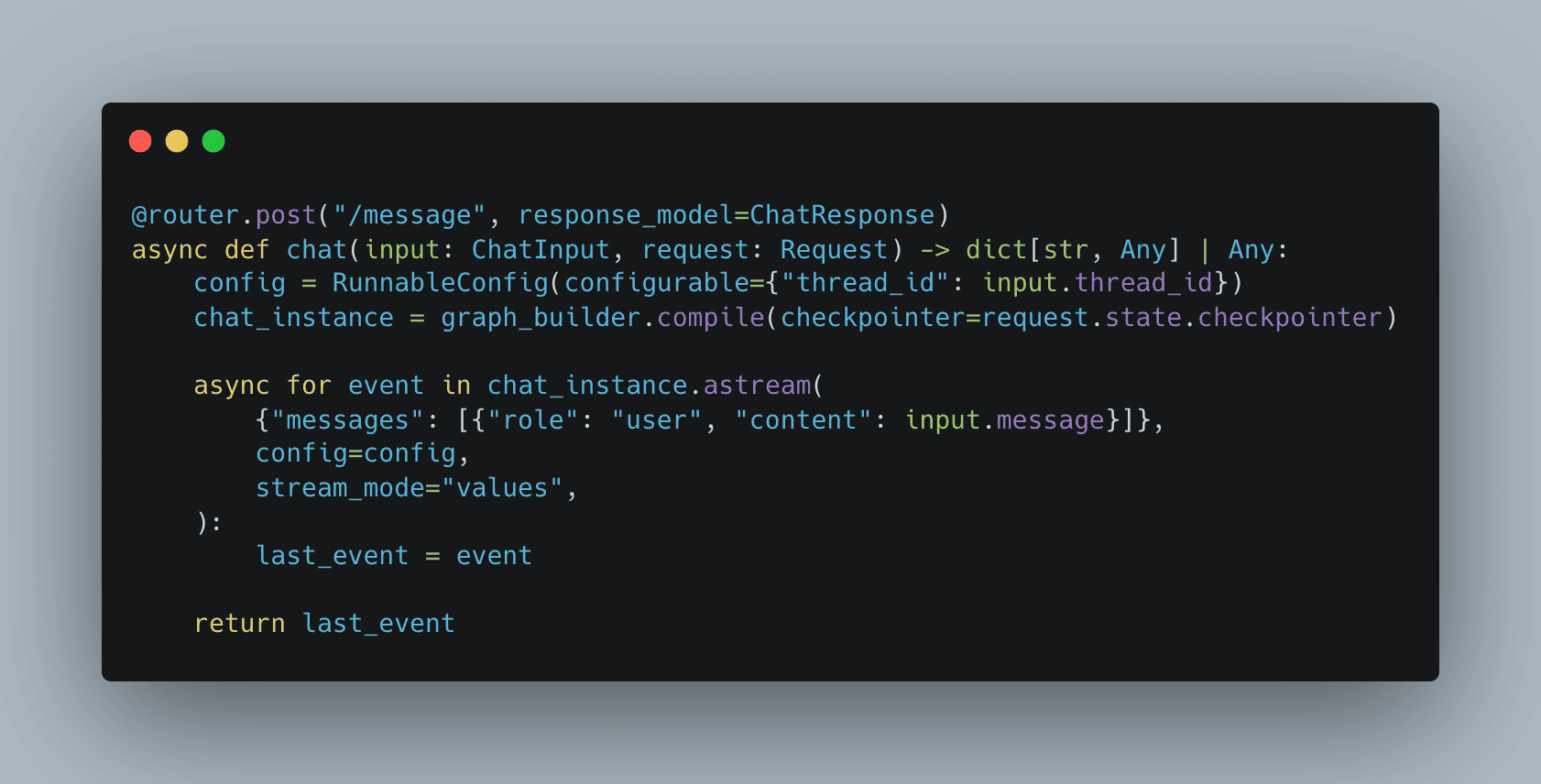

We’ll set up a single endpoint to communicate with the chatbot.

Let’s break down what’s happening here.

This endpoint receives a user message and uses the chatbot to generate a response. The checkpointer, which was set up during the app’s startup, is pulled from request.state and passed into graph_builder.compile(). This gives the chatbot access to persistent memory through PostgreSQL.

The RunnableConfig carries the thread_id (under its "configurable" key), which tells the chatbot which conversation thread to load from and save to PostgreSQL. We then call chat_instance.astream() to send the message and stream back the response. Inside the loop, we keep only the last event; when the stream yields full state values, that final event is the conversation state after the agent has replied.
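Two details of this flow are easy to miss: LangGraph reads the thread ID from config["configurable"]["thread_id"], and when astream is called with stream_mode="values" each event is the full state, so the last event is the final conversation. The sketch below models both with an in-memory dictionary in place of PostgreSQL. InMemoryCheckpointer, fake_astream and chat are hypothetical names, not LangGraph APIs.

```python
import asyncio
from collections import defaultdict
from typing import AsyncIterator


class InMemoryCheckpointer:
    """Hypothetical stand-in for the Postgres checkpointer: one message list per thread_id."""

    def __init__(self) -> None:
        self.threads: dict[str, list[str]] = defaultdict(list)


async def fake_astream(message: str, config: dict, saver: InMemoryCheckpointer) -> AsyncIterator[dict]:
    """Yield full-state snapshots, similar to astream(..., stream_mode="values")."""
    thread_id = config["configurable"]["thread_id"]  # where LangGraph looks for the thread ID
    history = saver.threads[thread_id]  # earlier turns of this thread only
    history.append(f"user: {message}")
    yield {"messages": list(history)}  # snapshot after the input is added
    history.append(f"assistant: got {message!r}")
    yield {"messages": list(history)}  # snapshot after the chatbot node replies


async def chat(message: str, thread_id: str, saver: InMemoryCheckpointer) -> dict:
    """Mirror of the endpoint logic: build the config, stream, keep the last event."""
    config = {"configurable": {"thread_id": thread_id}}
    last_event: dict = {}
    async for event in fake_astream(message, config, saver):
        last_event = event
    return last_event


async def main() -> None:
    saver = InMemoryCheckpointer()
    await chat("hi", "thread-1", saver)
    same = await chat("still there?", "thread-1", saver)
    other = await chat("hello", "thread-2", saver)
    print(len(same["messages"]), len(other["messages"]))  # 4 2


if __name__ == "__main__":
    asyncio.run(main())
```

Because the memory is keyed by thread_id, reusing an ID continues a conversation while a new ID starts a fresh one; with the real checkpointer the same rule holds, only the storage is PostgreSQL.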

Passing the lifespan into the FastAPI app

We pass the lifespan function to the FastAPI constructor: app = FastAPI(lifespan=lifespan)

This tells FastAPI to run our custom startup and shutdown logic when the app launches and exits.

Step 3: Set Up Docker with Docker Compose

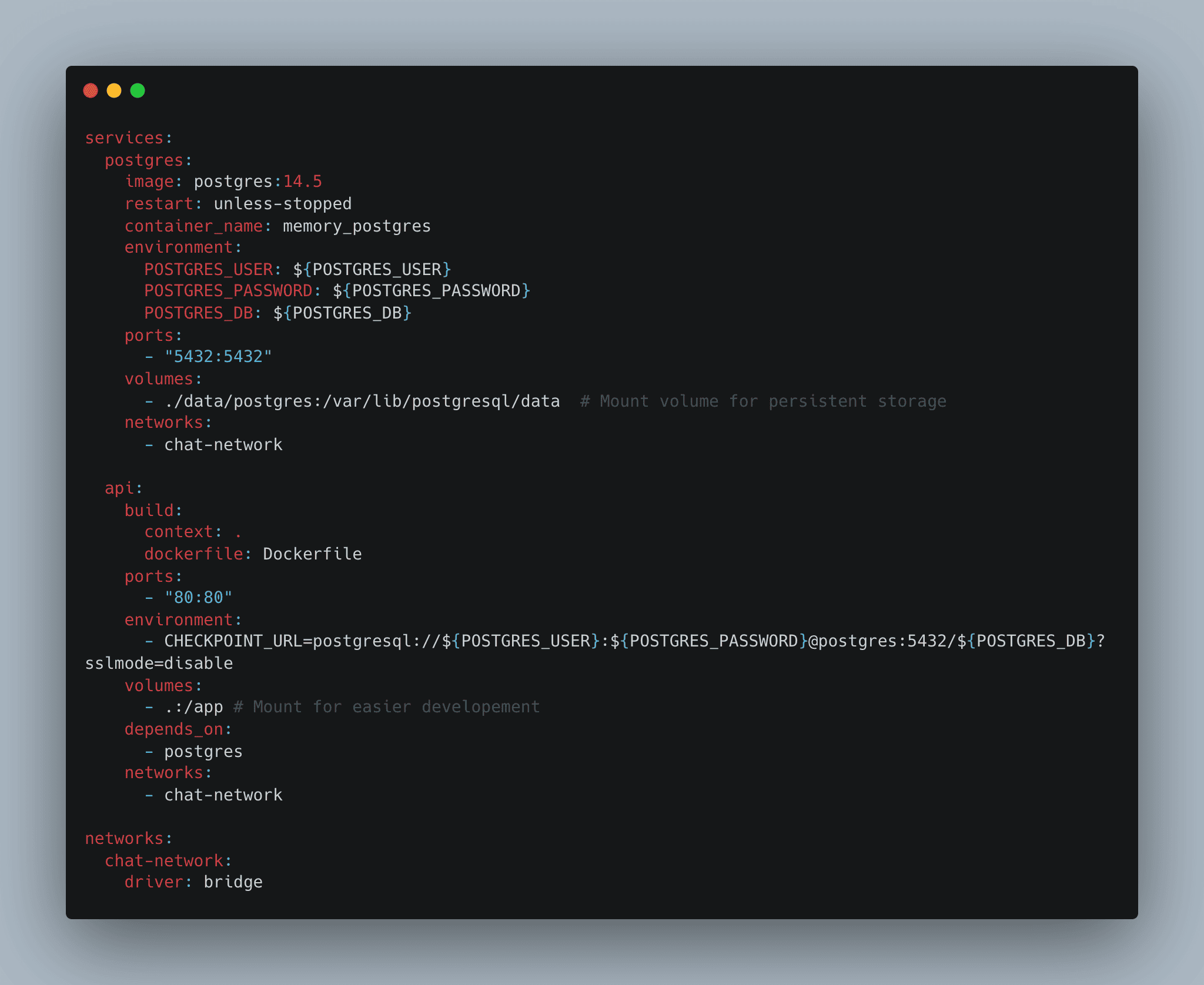

This configuration defines two services: postgres for the database and api for the FastAPI app. The postgres service stores its data in a persistent volume, so the chatbot’s memory survives container restarts. The api service is built from a local Dockerfile and connects to the database through the CHECKPOINT_URL environment variable. Both services join the shared Docker network chat-network, where each container can reach the other by its service name, so the database host in CHECKPOINT_URL is typically postgres. Each service runs in its own container, the database keeps its data, and the two communicate without extra configuration.
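On the application side, the api container only needs to read CHECKPOINT_URL from its environment. A minimal sketch, assuming the variable is set for the api service in docker-compose.yml; get_checkpoint_url is a hypothetical helper that fails fast with a clear message instead of letting the pool fail later with a less obvious connection error. The URL in the demo is a placeholder.

```python
import os
import warnings
from urllib.parse import urlsplit


def get_checkpoint_url() -> str:
    """Read CHECKPOINT_URL from the environment and fail fast if it is missing or malformed."""
    url = os.environ.get("CHECKPOINT_URL")
    if not url:
        raise RuntimeError("CHECKPOINT_URL is not set; define it for the api service")
    parts = urlsplit(url)
    # libpq accepts both postgresql:// and postgres:// as URI schemes.
    if parts.scheme not in ("postgresql", "postgres"):
        raise RuntimeError(f"CHECKPOINT_URL must use postgresql://, got {parts.scheme!r}")
    if parts.hostname in ("localhost", "127.0.0.1"):
        # Inside a container, localhost is the container itself, not the postgres service.
        warnings.warn("CHECKPOINT_URL points at localhost; inside Compose use the service name")
    return url


if __name__ == "__main__":
    # Placeholder value for a local run; in Compose the api service's environment provides it.
    os.environ.setdefault("CHECKPOINT_URL", "postgresql://chat:secret@postgres:5432/chatbot")
    print(get_checkpoint_url())
```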

Step 4: Running the Chatbot

Start both services with Docker Compose, for example: docker compose up --build

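Once the containers are running, visit http://localhost/docs (adjust the port if your docker-compose.yml maps the API to a different one) to try the chatbot endpoint through FastAPI’s interactive API docs.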

Conclusion

In this article, we’ve set up a basic chatbot application using Docker, FastAPI, and PostgreSQL. We used the lifespan function to manage the database connection and ensure the chatbot’s memory is stored persistently. The docker-compose.yml file ties everything together, running both the PostgreSQL database and the FastAPI app, and ensuring they can communicate over a shared network. This setup offers a simple, reliable foundation for building a scalable chatbot while keeping things easy to deploy and develop with Docker.