Accessing HR data in a tech company is rarely straightforward. You often need special permissions, manually exported spreadsheets, or custom integrations. So, what if you could build a fully automated HR data product—with zero ongoing HR effort—using open-source tools and just a bit of engineering?

That’s exactly what we did. In this post, I’ll walk you through how we built an internal HR data pipeline that powers real-time dashboards, automates time-off syncing, supports offboarding processes, and integrates seamlessly with tools like GetDX—all while requiring no vendor licenses or new platforms.

TL;DR

We built a daily automated HR data pipeline using just a HiBob service account and a few open-source tools. This pipeline:

- Provides accurate, up-to-date employee and time-off data

- Powers dashboards, internal systems, and 3rd-party integrations

- Enables self-service HR analytics and reporting

- Improves offboarding processes

- Runs entirely on GitHub Actions, without the need for an ETL platform

Let’s dive into how we did it.

Stack Overview: Python, dbt Core, and GitHub Actions

Our pipeline is built using simple, open-source, and well-supported tools:

- Python — to fetch data from HiBob’s API

- dbt Core — to transform and document our HR models

- GitHub Actions — to schedule and automate the whole process

The only requirement from our HR team was to provide a HiBob service account with access to specific, non-sensitive fields.

🚀 Why It Works So Well

- Minimal Dependencies: Just Python and requests. No Airflow, no vendor lock-in.

- Maximum Control: We can modify endpoints, add filters, or change outputs in minutes.

- CI/CD Friendly: It fits perfectly into GitHub Actions, which runs the script daily and notifies us on failure via Google Chat.

🐍 Meet the Workhorse: bob.py

At the core of our automated HR data pipeline is a deceptively simple but powerful Python script: bob.py. This little script does the heavy lifting of turning HiBob’s HR platform into a structured, analytics-ready data source — with no ongoing manual effort and zero third-party ETL tools involved.

So, what does it actually do?

✅ 1. Authenticates Securely with HiBob’s API

Using a service account provided by HR, the script connects to HiBob’s API over HTTPS. Credentials are stored securely via GitHub Secrets, and passed into the script at runtime via environment variables in our GitHub Actions pipeline. This keeps everything secure and compliant, while also making it CI/CD-friendly.

🔄 2. Pulls From Multiple Endpoints

The script connects to two key HiBob endpoints that were fulfiling the scope of this project

/people: for a complete list of current and past employees/people/timeoff: for daily time-off data (who is off and when)

By combining these endpoints, we get a full picture of HR activity — from organizational structure to leave patterns — without having to rely on any HR exports or spreadsheets.

📦 3. Handles Pagination & Fails Gracefully

HiBob’s API responses are paginated, and bob.py handles this elegantly under the hood. It fetches the results of the API call and transforms them into newline-delimited JSON (.jsonl) files. This format is simple, lightweight, and ideal for downstream ingestion into your warehouse or lakehouse.

It also fails gracefully, as any errors in the response are exposed to the logs of the script directly visible in the Github action run page.

🧰 4. Output Ready for dbt + Analytics

The resulting .jsonl files are dropped into a known location and processed immediately by the next step in our GitHub Actions workflow. These raw files are used as the base for our r_ dbt models (like r_bob_time_off.sql and r_bob_employees_list.sql ), which standardize and transform the data for analytics.

This separation of concerns — Python for extraction, dbt for modeling — keeps our pipeline modular, testable and easy to maintain.

Code Snippets

bob.py

Upload on Snowflake

Finally we use snowflake.sqlalchemy to upload our raw (just transformed into JSON) responses on Snowflake in our RAW schema

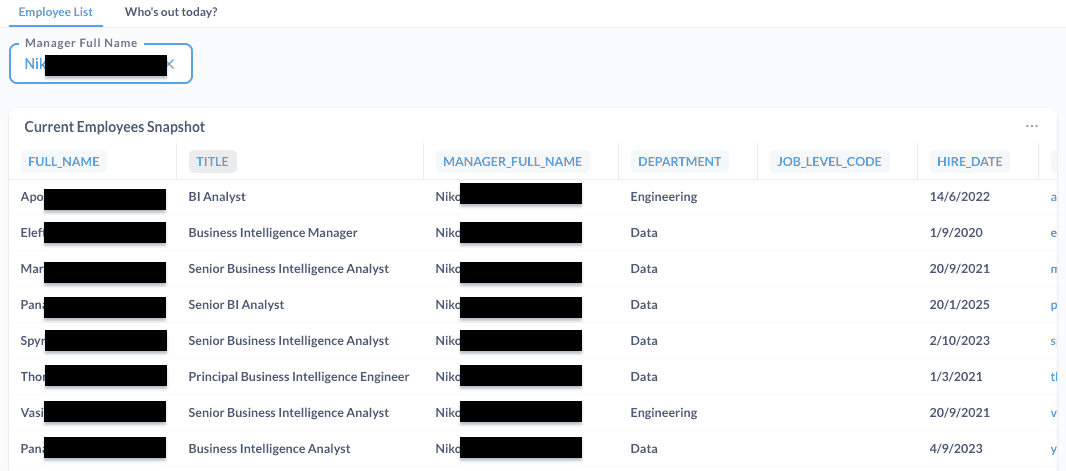

Full Employee Visibility, Every Day

A key part of our pipeline is daily employee snapshots — your single source of truth for who works here, in what role, and under what status.

For the data transformation we of course use dbt, which if you are working with data, you must at least have heard of!

✅ dbt models that Matter

- r_bob_employees_list.sql: A raw snapshot of all employees, enriched with fields like manager, location, job level, and department

- t_employee_historical_list.sql: A historical dimension table that lets you see exactly what the org looked like at any point in time

- t_employee_list.sql: A clean, ready-for-analytics layer that powers headcount, attrition, onboarding/offboarding, and team structure reports

With this setup, HR and team leads no longer need to dig through spreadsheets or chase manual reports.

Models

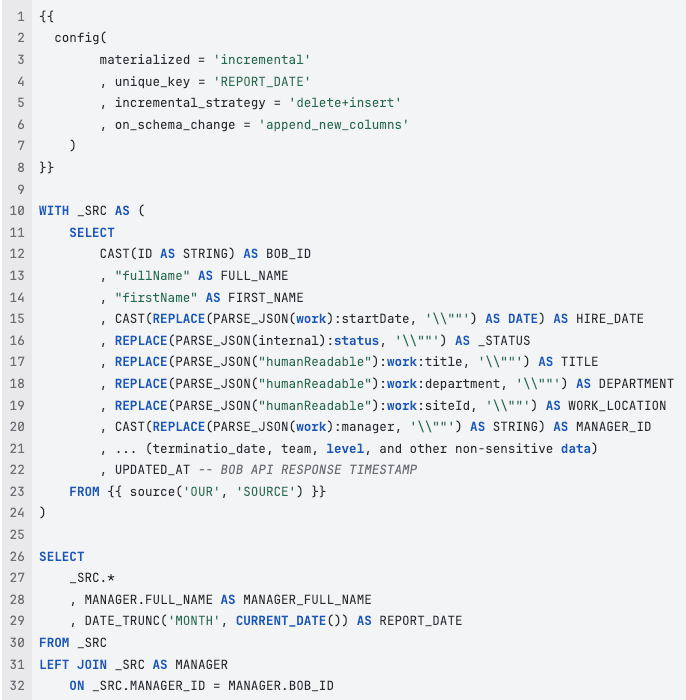

r_bob_employees_list

We store the daily people endpoint results in an incremental table which parses the information stored by python properly on curated columns:

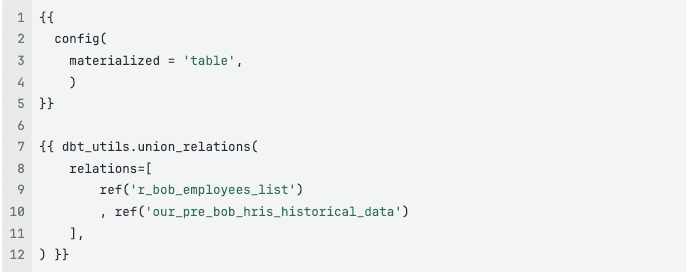

t_employee_historical_list

Since we migrated from another HRIS to Bob, we need to append our data in our exposed dataset in order to preserve history

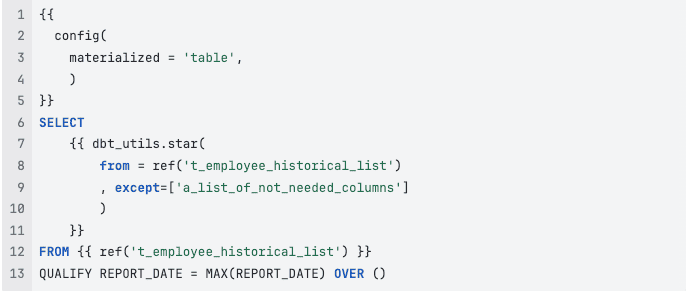

t_employee_list

This is the current month snapshot of all employees that ever worked on our company. Only some of them have _STATUS = Active

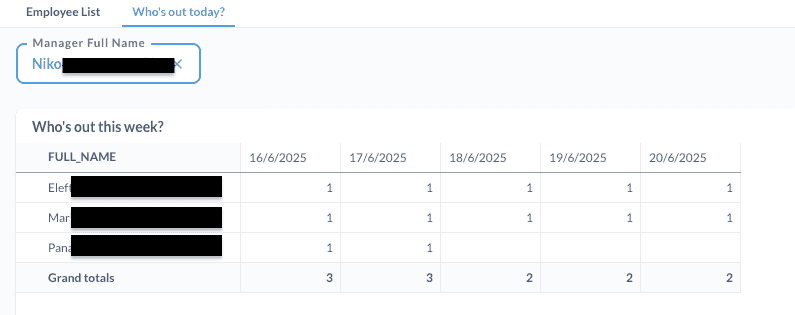

Time-Off Data: From API to Analysis in a Snap

One of the most impactful pieces of this pipeline is our handling of employee time-off data.

🔍 Python Does the Heavy Lifting

The bob.py script connects to the HiBob API using a service user’s credentials and pulls this key datasets:

timeoff/requests: High-level PTO requests with metadata such as start/end dates, labels, and approval status

The script handles pagination and error recovery, and drops the data into clean JSON lines — perfect for downstream use.

🧠 dbt Brings Structure and Intelligence

We layered our dbt models like this:

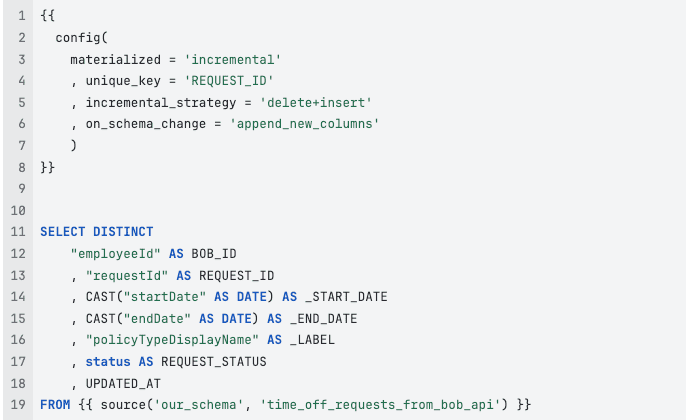

- r_bob_time_off_requests.sql: Staging models that clean and prepare the raw API data incrementally updated to get the newer status of any

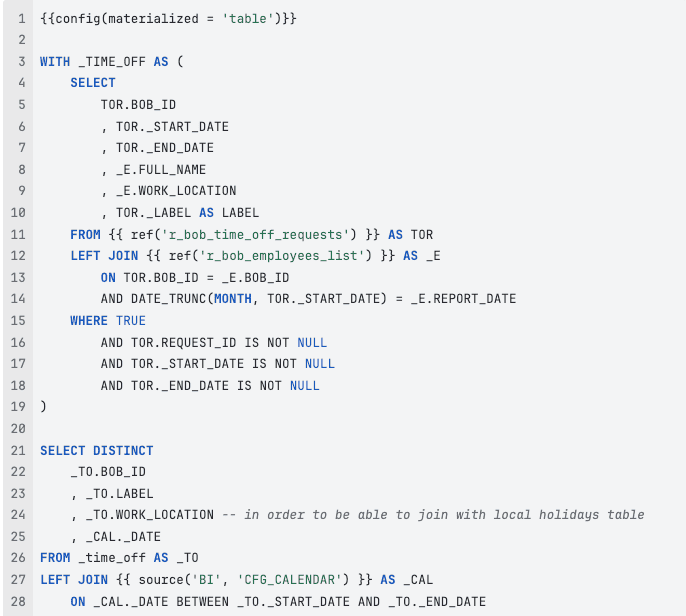

request_id - r_bob_time_off.sql Since a request is just two dates:

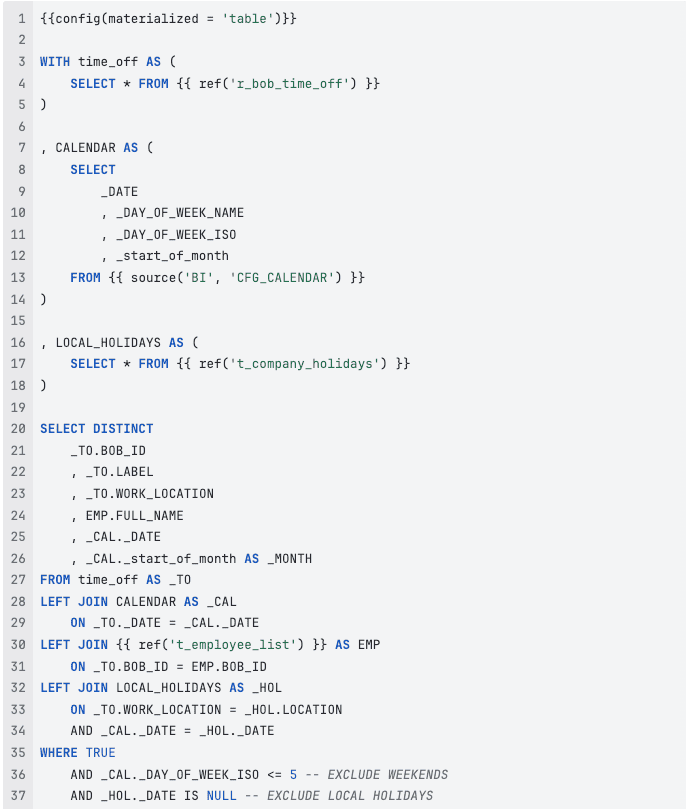

start_dateandend_datewe spread all the days in between in one row per each day (for eachrequest_id) - t_time_off.sql: Our transformed model that:

- Joins with a calendar table to identify Weekends

- Joins with a Company Holidays table that has per location days that people are not expected to show up for duty and includes, local holidays and company wellness days off

- Prepares time-off entries for direct analysis and integration, showing which days from the

request_idshould be debited on the leave accrual of each employee

💡 What This Enables

- Up-to-date PTO dashboards without needing HR exports

- Easy cross-referencing of leave with projects, team capacity, and org charts

- Automated feeds to platforms like GetDX to minimize onboarding overhead

Code Snippets

r_bob_time_off_requests

This is an incremental table updating the latest status of each request_id:

r_bob_time_off

This is the code that spreads the request_ids into separate rows for each day between the start and end date of the request

t_time_off

This is the curated table that shows, all the days that each employees actually uses a day off from their accrual which means that is between the start and end dates of their request but it’s not a weekend or a local holiday.

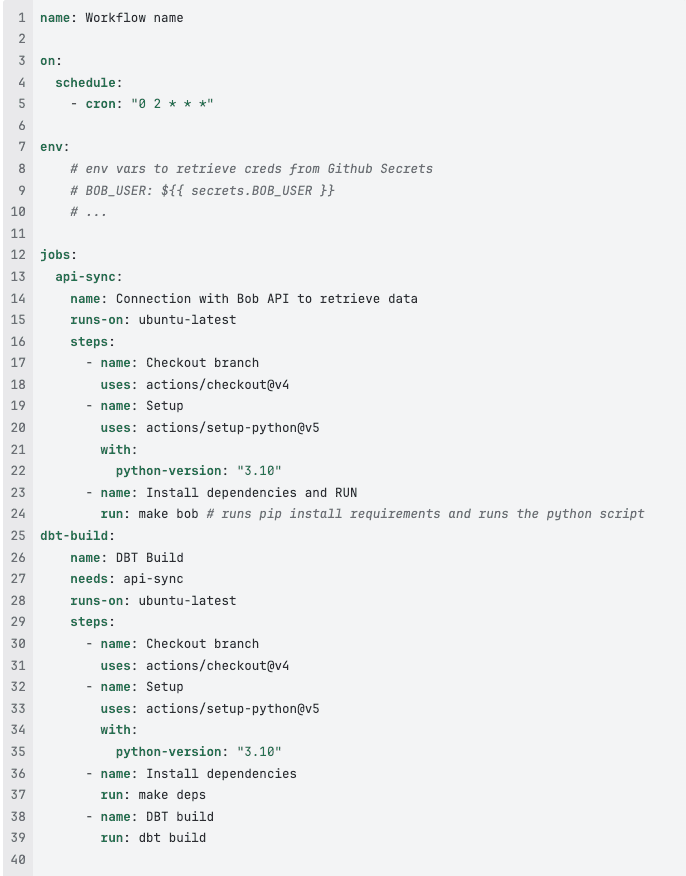

CI/CD

A simple workflow file was created in the .github/workflows/ folder with a simple structure in order to run a daily flow of calling the Bob API endpoints, storing them on snowflake using python, and then run the dbt build command on the fresher data

et voilà!

🏁 The Takeaway: A Small Script with a Big Impact

This project isn’t just a data pipeline — it’s a blueprint for how lean teams can build real, scalable data products without waiting on massive buy-in or heavyweight tools.

In just a few weeks, with minimal HR involvement, we:

- Built trust in HR data through transparency and consistency

- Unlocked critical metrics for workforce planning, team capacity, and compliance

- Created a foundation for other systems like GetDX, performance review tooling, and internal dashboards to consume this data automatically

- Helped shift the culture toward self-serve analytics and data access

This is what data democratization looks like in practice.

And the best part? Anyone can replicate it.

You don’t need an enterprise ETL platform.

You don’t need months of coordination.

You just need Python, dbt, a CI pipeline, and a bit of curiosity.